Unlock Python’s Power: Enhance Your Code with Concurrency and Parallelism

In the realm of coding, Python shines as a versatile tool. But what if you could unleash its true potential by harnessing the power of concurrency and parallelism? This guide will take you on an exciting journey to explore how these techniques can transform your Python code, unlocking new levels of efficiency and performance.

From understanding the fundamentals of multiprocessing and multithreading to delving into the nuances of asynchronous programming, we’ll cover it all. Along the way, you’ll discover practical examples, best practices, and insider tips to help you master the art of concurrent Python programming.

Multiprocessing

`multiprocessing` is a module in Python’s standard library that lets you create and manage multiple processes that run at the same time. It’s a powerful tool that can significantly enhance the performance of your Python code, especially when dealing with computationally intensive tasks.

Multiprocessing works by creating a separate Python interpreter for each process, which means that each process has its own memory space and can run independently of the others. This allows you to take advantage of multiple cores or processors on your computer, which can lead to significant speedups.

Benefits of Multiprocessing

- Improved performance for computationally intensive tasks

- Increased scalability by utilizing multiple cores or processors

- Improved responsiveness of your application by offloading tasks to separate processes

Limitations of Multiprocessing

- Increased memory usage due to each process having its own memory space

- Potential for race conditions and other concurrency issues

- More complex code structure compared to single-threaded code

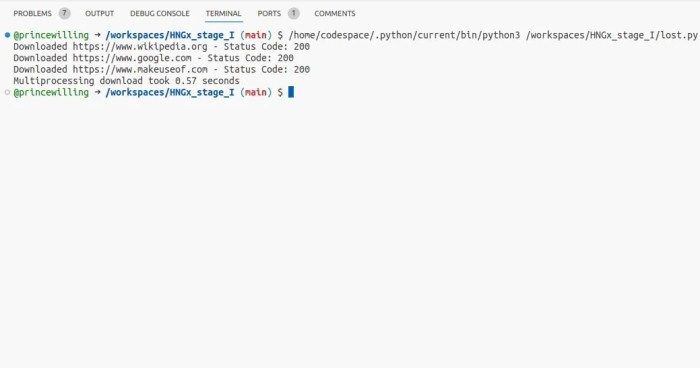

Implementing Multiprocessing in Python

To implement multiprocessing in Python, you can use the `multiprocessing` module. This module provides a number of classes and functions for creating and managing processes.

The most basic way to create a process is to use the `Process` class. The `Process` class takes a target function as an argument, which is the function that you want to run in the new process.

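```python
import multiprocessing


def worker(num):
    # Runs in a separate process
    print(f'Worker: {num}')


if __name__ == '__main__':
    jobs = []
    for i in range(5):
        p = multiprocessing.Process(target=worker, args=(i,))
        jobs.append(p)
        p.start()

    # Wait for every worker to finish before exiting
    for p in jobs:
        p.join()
```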
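The workers above only print, so the parent process never receives a result. One common way to get values back is a `multiprocessing.Queue`. The sketch below is a minimal illustration under that assumption; the `square` function and the `run_workers` helper are hypothetical names introduced here, not part of the original example.

```python
import multiprocessing


def square(num):
    """Hypothetical stand-in for real work: returns num squared."""
    return num * num


def worker(num, results):
    # Runs in the child process; sends (input, output) back to the parent
    results.put((num, square(num)))


def run_workers(numbers):
    numbers = list(numbers)
    results = multiprocessing.Queue()
    jobs = [multiprocessing.Process(target=worker, args=(n, results))
            for n in numbers]
    for p in jobs:
        p.start()
    # Read one result per process before joining, so no child is left
    # blocked while trying to flush data into the queue
    collected = dict(results.get() for _ in jobs)
    for p in jobs:
        p.join()
    return collected


if __name__ == '__main__':
    print(run_workers(range(5)))
```

Results arrive in whatever order the processes finish, which is why each one is tagged with its input.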

Multithreading

Multithreading is a technique that allows a single Python program to work on multiple tasks concurrently. It involves creating multiple threads, which are independent execution paths within a single process that share its memory.

In standard CPython builds, the Global Interpreter Lock (GIL) lets only one thread execute Python bytecode at a time, so threads generally do not speed up CPU-bound pure-Python code. They help most with I/O-bound work, where one thread can run while others wait on the network, the disk, or other blocking calls that release the GIL.

Benefits of Multithreading

- Improved Performance: Multithreading lets I/O-bound tasks overlap their waiting time, which can significantly shorten total run time when the work can be broken down into independent subtasks.

- Increased Responsiveness: Multithreading allows the program to handle multiple requests or events concurrently, enhancing the user experience and responsiveness of the application.

- Efficient Resource Utilization: While one thread waits on I/O, another can use the CPU, so the program spends less time idle. Running Python code on several cores at once generally requires multiple processes instead.
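As a rough illustration of overlapping waits, the sketch below starts several threads that each sleep for a fixed time. `fake_download` and `run_downloads` are hypothetical names, and `time.sleep` stands in for a real network or disk wait.

```python
import threading
import time


def fake_download(name, delay, results):
    """Hypothetical I/O-bound task; time.sleep stands in for a network wait."""
    time.sleep(delay)
    results[name] = f"{name} done"  # each thread writes its own key


def run_downloads(names, delay=0.2):
    results = {}
    threads = [threading.Thread(target=fake_download, args=(n, delay, results))
               for n in names]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    elapsed = time.perf_counter() - start
    return results, elapsed


if __name__ == "__main__":
    results, elapsed = run_downloads(["a", "b", "c", "d"])
    print(results)
    print(f"elapsed: {elapsed:.2f}s")
```

Because the waits overlap, the elapsed time should be close to one delay rather than the sum of all four.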

Limitations of Multithreading

- Complexity: Managing multiple threads can introduce complexity into the code, requiring careful synchronization and coordination to avoid race conditions and deadlocks.

- Overhead: Creating and managing threads incurs some overhead, which can impact the performance of the program, especially for short-lived or frequently created threads.

- Shared Resources: When multiple threads share the same resources, such as global variables or objects, it becomes essential to implement proper synchronization mechanisms to prevent data corruption or unexpected behavior.
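The shared-resource point can be sketched with a `threading.Lock` guarding a counter. The `Counter` class and `run` helper below are hypothetical names used only for illustration.

```python
import threading


class Counter:
    """Hypothetical shared resource guarded by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # `self.value += 1` is a read-modify-write sequence, so guard it
        with self._lock:
            self.value += 1


def run(num_threads=4, increments=10_000):
    counter = Counter()

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(run())  # 40000
```

With the lock in place, every increment is applied exactly once, so the total always equals `num_threads * increments`.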

Concurrency and Parallelism

Concurrency and parallelism are two important concepts in computer science that can be used to improve the performance of Python code. Concurrency means multiple tasks are in progress over the same period, often by interleaving them, while parallelism means multiple tasks literally execute at the same instant on different cores.

Concurrency

Concurrency is the ability of a program to make progress on multiple tasks during overlapping time periods, typically by switching between them. This can be achieved by using threads, which are lightweight execution paths within a single process. Threads share the same memory space, so they can communicate with each other and share data.

Parallelism

Parallelism is the ability of a program to execute multiple tasks at the same instant, which requires multiple processors or cores. In Python, parallelism is usually achieved with multiple processes. Processes do not share the same memory space, so they exchange data through mechanisms such as queues, pipes, or shared memory rather than through ordinary variables.

Benefits of Concurrency and Parallelism

Concurrency and parallelism can provide a number of benefits, including:

- Improved performance: Concurrency and parallelism can help to improve the performance of Python code by allowing multiple tasks to run at the same time. This can be especially beneficial for tasks that are computationally intensive.

- Increased scalability: Concurrency and parallelism can help to increase the scalability of Python code by allowing it to run on multiple processors or cores. This can be especially beneficial for applications that need to handle a large number of users or requests.

- Reduced latency: Concurrency and parallelism can help to reduce the latency of Python code by allowing multiple tasks to run at the same time. This can be especially beneficial for applications that need to respond to requests quickly.

Limitations of Concurrency and Parallelism

Concurrency and parallelism also have some limitations, including:

- Increased complexity: Concurrency and parallelism can increase the complexity of Python code, making it more difficult to write and debug.

- Potential for race conditions: Concurrency and parallelism can lead to race conditions, which occur when multiple threads or tasks try to access the same resource at the same time.

- Increased memory usage: Concurrency and parallelism can increase the memory usage of Python code, as each thread or task requires its own stack space.

Choosing Between Concurrency and Parallelism

The choice between concurrency and parallelism depends on the specific needs of the application. Concurrency is best suited for applications that juggle many tasks that spend most of their time waiting, such as network requests, and so do not need to execute at the same instant. Parallelism is best suited for applications whose tasks are CPU-bound and can benefit from running on multiple processors or cores at once.

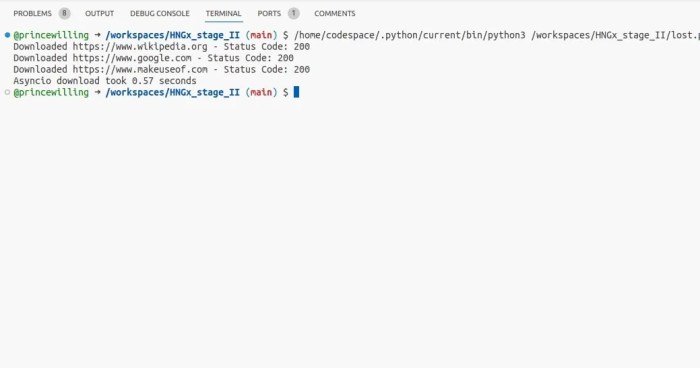

Asynchronous Programming

Asynchronous programming is a technique that allows Python code to execute tasks concurrently without blocking the main thread. This is achieved by using non-blocking I/O operations, which means that the program can continue to run while waiting for I/O operations to complete.

Asynchronous programming is particularly useful for applications that need to handle a large number of concurrent requests, such as web servers or network applications. It can also improve the performance of other I/O-intensive applications, although operations without a non-blocking interface, such as ordinary file reads and writes, usually have to be handed off to a thread.
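A minimal sketch of this idea with `asyncio` follows. The `fetch` and `fetch_all` coroutines are hypothetical names, and `asyncio.sleep` stands in for a real non-blocking network call.

```python
import asyncio


async def fetch(name, delay):
    """Hypothetical request; asyncio.sleep stands in for non-blocking I/O."""
    await asyncio.sleep(delay)
    return f"{name}: ok"


async def fetch_all(names, delay=0.1):
    # gather() runs the coroutines concurrently and returns their results
    # in the same order as the inputs
    return await asyncio.gather(*(fetch(n, delay) for n in names))


if __name__ == "__main__":
    print(asyncio.run(fetch_all(["users", "orders", "items"])))
    # ['users: ok', 'orders: ok', 'items: ok']
```

All three simulated requests wait at the same time on a single thread, so the whole call takes roughly one delay.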

Benefits of Asynchronous Programming

- Improved performance: Asynchronous programming can improve the performance of Python code by allowing it to handle multiple tasks concurrently without blocking the main thread.

- Increased scalability: Asynchronous programming can help to scale Python applications to handle a larger number of concurrent requests.

- Improved responsiveness: Asynchronous programming can make Python applications more responsive by allowing them to continue to run while waiting for I/O operations to complete.

Limitations of Asynchronous Programming

- Increased complexity: Asynchronous programming can be more complex to implement than synchronous programming.

- Potential for race conditions: Asynchronous programming can still introduce race conditions. When a coroutine pauses at an `await`, another coroutine may modify the same data before the first one resumes.

- Limited support: Asynchronous programming is not supported by all Python libraries and frameworks.
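When a library offers only blocking calls, one possible workaround is to run those calls in worker threads with `asyncio.to_thread` (available since Python 3.9). The sketch below assumes a hypothetical `blocking_lookup` function standing in for such a library call.

```python
import asyncio
import time


def blocking_lookup(key):
    """Hypothetical stand-in for a library call that has no async API."""
    time.sleep(0.1)
    return key.upper()


async def lookup_all(keys):
    # to_thread() runs each blocking call in a worker thread, so the
    # event loop stays free to run other coroutines in the meantime
    calls = (asyncio.to_thread(blocking_lookup, k) for k in keys)
    return await asyncio.gather(*calls)


if __name__ == "__main__":
    print(asyncio.run(lookup_all(["a", "b", "c"])))  # ['A', 'B', 'C']
```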

Threading vs. Multiprocessing

Threading and multiprocessing are two approaches to concurrency and parallelism in Python. Threading creates multiple threads within a single process, while multiprocessing creates multiple processes.

Key Differences

- Resource Sharing: Threads share the same memory space, while processes have their own separate memory space.

- Overhead: Creating threads is less expensive than creating processes.

- Synchronization: Threads need synchronization mechanisms around any shared data to avoid race conditions. Processes avoid most of these issues because they share nothing by default, but they still need coordination when they use shared memory, files, or other common resources.

- GIL: The Global Interpreter Lock (GIL) in CPython limits the number of threads that can execute Python bytecode at any one time to one. This limits the benefit of threading for CPU-bound work.

Choosing Between Threading and Multiprocessing

The choice between threading and multiprocessing depends on the specific requirements of the application.

- Threading is suitable for:

  - I/O-bound tasks, such as network requests, that spend most of their time waiting.

  - Tasks that need cheap access to shared in-memory data.

  - Applications where the overhead of creating processes is significant.

- Multiprocessing is suitable for:

  - CPU-bound tasks.

  - Applications where the GIL is a bottleneck.

  - Tasks that can be easily parallelized and need little shared state.
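The standard library's `concurrent.futures` module exposes thread pools and process pools behind the same interface, which makes it cheap to try both on a given workload and measure. The sketch below assumes two hypothetical tasks, `wait_task` and `cpu_task`, and a hypothetical `run_with` helper.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor
import time


def wait_task(delay):
    """Hypothetical I/O-style task: mostly waiting."""
    time.sleep(delay)
    return delay


def cpu_task(n):
    """Hypothetical CPU-style task: pure Python arithmetic."""
    return sum(i * i for i in range(n))


def run_with(executor_cls, func, items, workers=4):
    # Both executor classes accept max_workers and provide map()
    with executor_cls(max_workers=workers) as executor:
        return list(executor.map(func, items))


if __name__ == "__main__":
    print(run_with(ThreadPoolExecutor, wait_task, [0.1] * 4))
    print(run_with(ProcessPoolExecutor, cpu_task, [100_000] * 4))
```

Swapping the executor class is a one-line change, so the decision can be based on timings from the real workload rather than on guesswork.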

Process Pools

Process pools are a great way to enhance the performance of your Python code by distributing tasks across multiple processes. This can be especially beneficial for tasks that are computationally expensive or that can be easily parallelized.

To create a process pool, you can use the `multiprocessing` module. The `Pool` class in this module allows you to create a pool of worker processes that can execute tasks in parallel.

Creating a Process Pool

To create a process pool, you can use the following code:

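```python
import multiprocessing

# Create a pool of 4 worker processes
pool = multiprocessing.Pool(4)
```

The first argument to `Pool` is the number of worker processes to create; if it is omitted, the pool picks a default based on the number of available CPUs. In this example, we are creating a pool of 4 worker processes. In a script, create the pool inside an `if __name__ == '__main__':` block, because worker processes may re-import the main module when they start.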

Using a Process Pool

Once you have created a process pool, you can use it to execute tasks. The simplest option is the `apply()` method of the `Pool` class, which takes the function to be executed and a tuple of arguments to pass to it. Note that `apply()` runs a single call in one worker and blocks until the result is ready, so on its own it does not run anything in parallel; `map()` and `apply_async()` are the methods that spread work across the workers.

```python
# Run `sum` in a worker process; the list is its single positional argument
result = pool.apply(sum, ([1, 2, 3, 4, 5],))
```

The `apply()` method will return the result of the function. In this example, the result will be the sum of the numbers in the list, which is 15.
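To spread many inputs across the workers, `Pool.map()` is a common choice. The following is a minimal sketch; `cube` and `cube_all` are hypothetical names chosen for illustration.

```python
import multiprocessing


def cube(n):
    """Hypothetical worker function. It is defined at module level so it
    can be pickled and sent to the worker processes."""
    return n ** 3


def cube_all(numbers, workers=4):
    # The context manager shuts the pool down when the block exits
    with multiprocessing.Pool(workers) as pool:
        # map() splits the inputs across workers and keeps input order
        return pool.map(cube, numbers)


if __name__ == "__main__":
    print(cube_all(range(6)))  # [0, 1, 8, 27, 64, 125]
```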

Benefits of Using Process Pools

There are several benefits to using process pools:

- Improved performance: Process pools can improve the performance of your code by distributing tasks across multiple processes. This can be especially beneficial for tasks that are computationally expensive or that can be easily parallelized.

- Increased scalability: Process pools can be easily scaled up or down to meet the needs of your application. This makes them a good choice for applications that need to handle a varying workload.

- Simplified code: Process pools can simplify your code by allowing you to write code that is more concise and easier to read.

Limitations of Using Process Pools

There are also some limitations to using process pools:

- Increased memory usage: Process pools can increase the memory usage of your application. This is because each process in the pool has its own copy of the Python interpreter and the data that is being processed.

- Potential for deadlocks: Process pools can lead to deadlocks if the tasks that are being executed are not properly synchronized.

- Not suitable for all tasks: Process pools are not suitable for all tasks. For example, tasks that require access to shared resources or that cannot be easily parallelized are not good candidates for process pools.

Task Queues

Task queues are a powerful tool for enhancing Python code by allowing you to distribute tasks across multiple workers. This can lead to significant performance improvements, especially for CPU-intensive tasks.

In Python, there are several popular task queue libraries available, such as Celery and RQ, along with job schedulers such as APScheduler. These libraries provide a variety of features to help you manage and execute tasks, including support for scheduling, retries, and error handling.

Benefits of Using Task Queues

- Improved performance: By distributing tasks across multiple workers, task queues can significantly improve the performance of your Python code.

- Scalability: Task queues can be easily scaled to handle increasing workloads by adding more workers.

- Reliability: Task queues provide built-in support for retries and error handling, which can help to ensure that your tasks are executed reliably.

Limitations of Using Task Queues

- Increased complexity: Task queues can add complexity to your Python code, especially if you are not familiar with the underlying concepts.

- Potential for race conditions: If you are not careful, task queues can introduce race conditions into your code.
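The producer and worker pattern behind these libraries can be sketched in a single process with the standard library's `queue.Queue` and a few threads. This is only a stand-in for illustration: it is not how Celery or RQ are used, and the `worker` and `run_queue` names are hypothetical.

```python
import queue
import threading


def worker(tasks, results):
    while True:
        item = tasks.get()
        if item is None:           # sentinel: no more work for this worker
            tasks.task_done()
            break
        func, arg = item
        try:
            results.put((arg, func(arg)))
        except Exception as exc:   # report the failure, keep the worker alive
            results.put((arg, exc))
        finally:
            tasks.task_done()


def run_queue(func, args, num_workers=3):
    args = list(args)
    tasks, results = queue.Queue(), queue.Queue()
    threads = [threading.Thread(target=worker, args=(tasks, results))
               for _ in range(num_workers)]
    for t in threads:
        t.start()
    for arg in args:
        tasks.put((func, arg))
    for _ in threads:
        tasks.put(None)            # one sentinel per worker
    tasks.join()                   # wait until every item is marked done
    for t in threads:
        t.join()
    return dict(results.get() for _ in args)


if __name__ == "__main__":
    print(run_queue(len, ["a", "bb", "ccc"]))
```

A real task queue adds a message broker, persistence, and retries on top of this basic shape, and usually runs its workers in separate processes or on separate machines.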

Concurrency Frameworks

Concurrency frameworks are software libraries that provide tools and abstractions for writing concurrent and parallel code. They offer features such as thread pools, task queues, and synchronization primitives, making it easier to develop and manage concurrent applications.

Popular Concurrency Frameworks for Python

- asyncio: An asynchronous I/O framework that supports coroutines and event loops, making it suitable for building high-performance network and web applications.

- multiprocessing: A module that provides support for creating and managing multiple processes, each with its own memory space and resources.

- concurrent.futures: A module that offers a high-level interface for executing tasks concurrently, using either threads or processes.

- Celery: A distributed task queue that allows you to offload tasks to worker processes, enabling efficient task scheduling and parallelism.

- Twisted: A mature and feature-rich framework that provides a comprehensive set of tools for building concurrent and asynchronous applications.

Benefits of Using Concurrency Frameworks

- Improved performance: Concurrency frameworks allow you to distribute tasks across multiple cores or processes, maximizing hardware resources and reducing execution time.

- Scalability: By leveraging concurrency, you can scale your applications to handle increased workload without compromising performance.

- Responsiveness: Asynchronous frameworks like asyncio enable you to build responsive applications that can handle multiple requests concurrently without blocking.

- Simplified development: Concurrency frameworks provide high-level abstractions that simplify the process of writing concurrent code, reducing development time and effort.

Example: Using asyncio for Asynchronous I/O

```python
import asyncio


async def task(name):
    # A coroutine that simulates a long-running task
    print(f"Starting {name}")
    await asyncio.sleep(1)  # Simulate a 1-second delay
    print(f"{name} completed")


async def main():
    # Create and schedule multiple tasks concurrently
    tasks = [task(f"Task {i}") for i in range(5)]
    await asyncio.gather(*tasks)


if __name__ == "__main__":
    # asyncio.run() creates the event loop, runs main(), and closes the loop
    asyncio.run(main())
```

This example demonstrates how asyncio can be used to execute multiple tasks concurrently, improving the responsiveness and efficiency of your application. The five tasks finish in about one second in total rather than five.

Performance Considerations

Concurrency and parallelism can significantly enhance the performance of Python code, but it’s crucial to be aware of potential pitfalls and performance considerations.

Common Pitfalls

- GIL (Global Interpreter Lock): In CPython, the default Python interpreter, only one thread can execute Python bytecode at a time, limiting parallelism.

- Thread Overhead: Creating and managing threads can introduce overhead, especially for short-lived tasks.

- Deadlocks: Improper synchronization can lead to deadlocks, where threads wait indefinitely for resources.

- Race Conditions: Concurrent access to shared resources can cause unpredictable results if not properly synchronized.
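One widely used way to avoid the deadlock pitfall is to always acquire locks in the same global order. The sketch below illustrates that idea with a hypothetical `Account` class and `transfer` function; ordering by account name is an arbitrary choice made for the example.

```python
import threading


class Account:
    """Hypothetical shared resource with its own lock."""

    def __init__(self, name, balance):
        self.name = name
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src, dst, amount):
    # Acquire the two locks in a fixed order (here: by account name) so two
    # opposite transfers can never each hold one lock and wait for the other
    first, second = sorted((src, dst), key=lambda acct: acct.name)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount


def run_transfers(rounds=1000):
    a, b = Account("a", 100), Account("b", 100)

    def move(src, dst):
        for _ in range(rounds):
            transfer(src, dst, 1)

    # The two threads transfer in opposite directions, the classic setup
    # for a deadlock when locks are taken in argument order instead
    threads = [threading.Thread(target=move, args=(a, b)),
               threading.Thread(target=move, args=(b, a))]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return a.balance, b.balance


if __name__ == "__main__":
    print(run_transfers())  # (100, 100)
```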

Best Practices

- Use Process-Based Parallelism: For CPU-intensive tasks, consider using multiprocessing instead of multithreading to avoid the GIL.

- Optimize Thread Creation: Minimize thread creation and reuse existing threads whenever possible.

- Avoid Unnecessary Synchronization: Only synchronize when necessary to prevent performance bottlenecks.

- Use Thread Pools: Manage threads efficiently by using thread pools to avoid thread creation overhead.

Benchmarking and Profiling

To optimize performance, it’s essential to benchmark and profile concurrent Python code. Use tools like the `timeit` module for simple benchmarking and `cProfile` for more detailed profiling.

- Benchmarking: Compare different approaches and identify bottlenecks by measuring execution times.

- Profiling: Analyze the performance of code, identifying hotspots and areas for improvement.
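A small sketch of both tools follows. The `sum_squares` function is a hypothetical piece of code under test, and `benchmark` and `profile` are hypothetical helper names wrapping `timeit.repeat` and `cProfile.Profile`.

```python
import cProfile
import io
import pstats
import timeit


def sum_squares(n=10_000):
    """Hypothetical function under test."""
    return sum(i * i for i in range(n))


def benchmark(func, repeats=5, number=100):
    # repeat() returns one total time per run; the minimum is usually the
    # least noisy estimate. Dividing by `number` gives seconds per call.
    return min(timeit.repeat(func, repeat=repeats, number=number)) / number


def profile(func):
    profiler = cProfile.Profile()
    profiler.enable()
    func()
    profiler.disable()
    out = io.StringIO()
    stats = pstats.Stats(profiler, stream=out)
    stats.sort_stats("cumulative").print_stats(5)  # top 5 entries
    return out.getvalue()


if __name__ == "__main__":
    print(f"{benchmark(sum_squares) * 1e6:.1f} microseconds per call")
    print(profile(sum_squares))
```

Benchmark first to confirm there is a problem worth solving, then profile to find where the time actually goes before adding threads or processes.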

Debugging Concurrent Code

Debugging concurrent code can be challenging due to the complex interactions between multiple threads or processes. This section explores the challenges of debugging concurrent code, provides techniques and tools for debugging Python code, and discusses common debugging pitfalls and how to avoid them.

A key challenge in debugging concurrent code is the non-deterministic nature of its execution. The order of execution of different threads or processes can vary, making it difficult to reproduce and debug errors. Additionally, race conditions, where the outcome of a program depends on the timing of thread execution, can be particularly difficult to track down.

Techniques and Tools

To debug concurrent code, several techniques and tools can be used. Logging and print statements can be added to trace the execution of different threads or processes, helping to identify the source of errors. Debugging tools such as pdb (Python debugger) and gdb (GNU debugger) can be used to step through the execution of code, allowing for the inspection of variables and the state of the program.
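For the logging approach, the `logging` module can stamp each message with the name of the thread that emitted it through the built-in `%(threadName)s` field. The sketch below assumes a hypothetical logger name and hypothetical `make_logger` and `run_traced` helpers.

```python
import logging
import sys
import threading


def make_logger(stream):
    logger = logging.getLogger("concurrency_demo")  # hypothetical name
    logger.setLevel(logging.DEBUG)
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    # %(threadName)s is a built-in LogRecord attribute
    handler.setFormatter(logging.Formatter("%(threadName)s %(message)s"))
    logger.addHandler(handler)
    logger.propagate = False
    return logger


def run_traced(stream, num_threads=3):
    logger = make_logger(stream)

    def work(n):
        logger.debug("start %d", n)
        logger.debug("end %d", n)

    # Naming the threads makes the trace much easier to read
    threads = [threading.Thread(target=work, args=(i,), name=f"worker-{i}")
               for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


if __name__ == "__main__":
    run_traced(sys.stderr)
```

The order of lines from different threads can change from run to run, which is exactly the kind of interleaving a trace like this helps expose.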

Common Pitfalls

Common debugging pitfalls in concurrent code include:

- Race conditions: When multiple threads or processes access shared resources without proper synchronization, leading to unexpected results.

- Deadlocks: When multiple threads or processes wait for each other to release locks, resulting in a state where no progress can be made.

- Livelocks: When multiple threads or processes keep reacting to each other, for example by repeatedly releasing and retrying locks, so that they stay busy but none of them makes progress.

To avoid these pitfalls, proper synchronization techniques should be used to ensure that shared resources are accessed safely and that deadlocks and livelocks are prevented.

Case Studies

Real-world applications demonstrate the practical benefits of concurrency and parallelism in Python code. These case studies provide valuable insights into the challenges and benefits of using these techniques, along with lessons learned and best practices.

Scientific Computing

- Challenge: Performing complex scientific simulations requiring extensive computations.

- Solution: Using parallelism to distribute computations across multiple processors, significantly reducing execution time.

- Benefits: Increased computational speed, allowing for more complex and detailed simulations.

- Lesson Learned: Careful optimization is crucial to ensure efficient parallel execution and avoid potential bottlenecks.

Web Development

- Challenge: Handling multiple user requests concurrently to improve website responsiveness.

- Solution: Implementing concurrency to process requests simultaneously, allowing for faster response times.

- Benefits: Improved user experience, increased website traffic capacity, and reduced server load.

- Lesson Learned: Proper synchronization mechanisms are essential to prevent data corruption and ensure thread safety.

Data Processing

- Challenge: Analyzing large datasets efficiently, requiring parallel processing to handle massive amounts of data.

- Solution: Using multiprocessing to create multiple worker processes that process data concurrently.

- Benefits: Reduced processing time, enabling faster insights and decision-making.

- Lesson Learned: Task distribution and synchronization are critical to ensure efficient parallel data processing.

Outcome Summary

As you embark on this adventure, you’ll not only enhance your Python skills but also gain a deeper understanding of how to optimize your code for maximum efficiency. Embrace the power of concurrency and parallelism, and watch your Python code soar to new heights!

Quick FAQs

Q: What’s the key difference between concurrency and parallelism?

A: Concurrency means multiple tasks are in progress over the same period, often by interleaving on a single core, while parallelism means tasks literally execute at the same instant on multiple cores or processors.

Q: Can I use both multiprocessing and multithreading in my Python code?

A: Yes, you can combine both techniques, but it’s crucial to carefully consider the specific requirements of your application to avoid potential issues.

Q: How can I debug concurrent Python code effectively?

A: Debugging concurrent code requires specialized techniques and tools. Consider using debuggers like pdb or ipdb, leveraging logging and tracing mechanisms, and employing visualizers to gain insights into the execution flow.